Roman Geus and Peter Arbenz

Swiss Federal Institute of Technology Zurich

Institute of Scientific Computing

mailto:geus@inf.ethz.ch

mailto:arbenz@inf.ethz.ch

Date: March 13, 2003

This paper describes our experience with the redesign and reimplementation of a large-scale application from accelerator physics using this mixed-language programming approach with Python and C.

The application code which was originally written in Fortran 90, with smaller parts written in C and Fortran 77, primarily solves eigenvalue problems and linear systems involving large sparse matrices [1]. We solved problems with matrix orders up to 7.5 million. The memory requirements of a single of these matrices is beyond 2 GBytes.

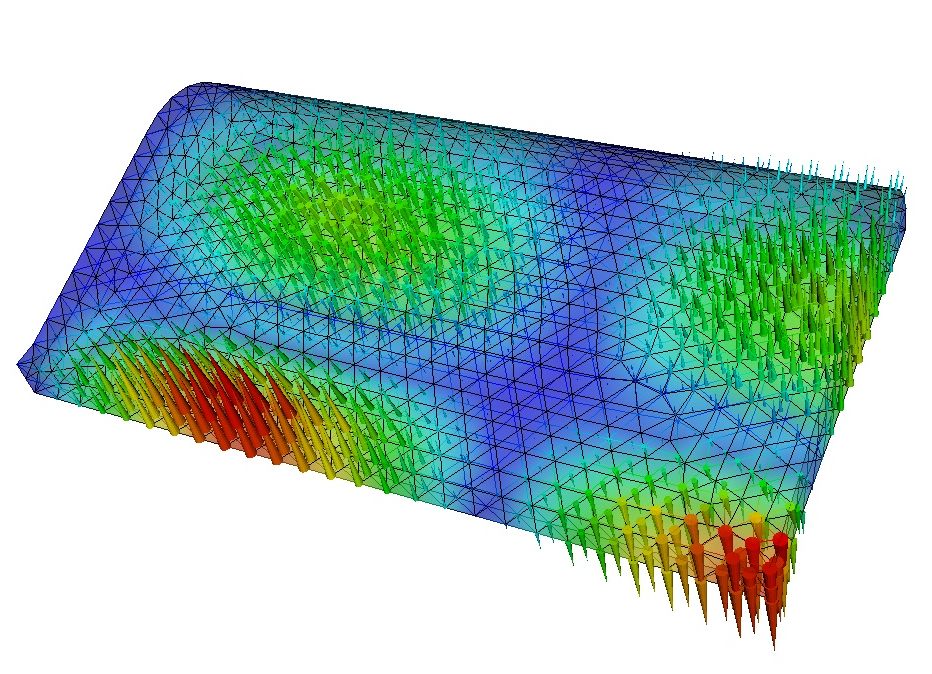

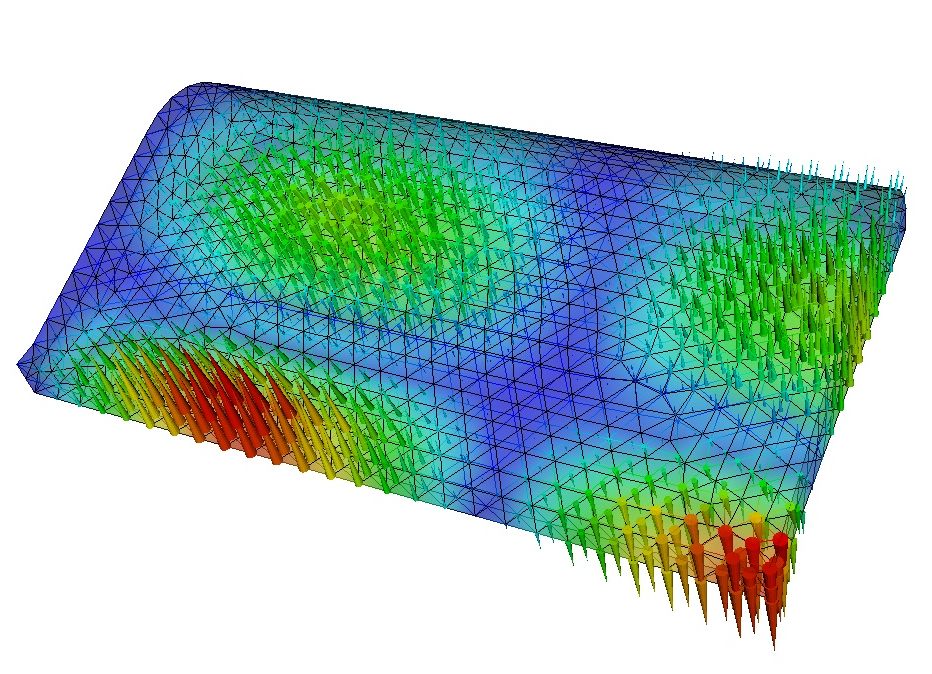

The paper also presents the two Python software packages: PySparse, for manipulating sparse matrices, and PyFemax, a finite element code for computing three-dimensional electro-magnetic fields in accelerator cavities.

In its final state, the original Fortran/C code was approximately 33'000 lines long. During its development time of approximately four years, the code became cluttered and was no longer well modularised, since many features were added that were not forseen in the beginning. The code was difficult to understand, maintain and extend.

The many features of the program were controlled by means of a parameter file containing over 100 different values to adjust. This approach that was handy initially turned out to be inflexible for the large amount of parameters.

The above reasons motivated us to redesign and rewrite the code. We had the following goals in mind:

In short: We wanted to have the simplicity of Matlab together with the performance of Fortran and C. In fact we wanted to have more than that, since Matlab lacks many language features desirable for building large applications.

Based on our previous experience with scripting languages, we found Python to be the language of choice for the redesign.

As an interpreted programming language, Python is ``out of the box'' not well suited for high-performance numerical applications. However, Python can easily be extended using modules written in C for performance-critical tasks.

We designed and implemented the PySparse package, which extends Python with new sparse matrix object types and some operations on them. The PyFemax package contains the application-specific code.

PySparse and PyFemax rely on the Numerical Python [2] package for storing and operating on dense matrices and vectors. PySparse is implemented using a highly optimised C code, while PyFemax is written almost completely in Python.

What we are calling the Python implementation is actually a set of modules which are implemented using a mixed-language programming approach: The application logic, the input/output routines and the finite element code are implemented in Python. On the other hand, the time-critical parts, like the sparse and dense linear algebra routines, including iterative solvers, preconditioners, sparse matrix factorisations and the eigensolver are implemented in C and are tightly integrated into the Python framework.

For writing the C extension modules, we used the Python modulator to generate skeletons which were then extended manually. This approach allowed us not only to have full control over the interface, but also to keep the calling overhead minimal and to use features not offered by automatic interface generators like SWIG.

Every Python object that has a shape attribute for returning the dimensions of a matrix and a matvec method for performing the matrix-vector multiplication can be passed as a matrix to PySparse routines.

Preconditioners have a shape attribute and also a precon method, that applies the preconditioner on one vector and stores the result in another vector. In analogy, a solver has a shape attribute and a solve method.

In this way, it possible to introduce e.g. a new preconditioner type without changing any of the existing library code in PySparse and PyFemax. Only the top level script, which creates objects of the new type needs to be adjusted.

A more elegant way to guarantee interoperability would be to design an appropriate class hierarchy, deriving all objects of a certain kind from a common abstract base class. With Python 2.2 this is not possible, since extension types cannot be used as base classes. However, there is an ongoing effort to do away with the differences between extension types and Python classes.

The modules that can be potentially used in a wide range of scientific applications have been placed in PySparse, while the more application-specific modules are part of PyFemax.

From the application user's point of view, other improvements are more important: The computation can now be steered by scripts, which usually consist of less than 30 lines of Python code. This offers exciting new flexibilities. An additional benefit is the fact that the computational structure is directly reflected in these scripts and thus becomes much more transparent.

One sparse matrix type (ll_mat) is designed for efficiently creating or modifying matrices. Another sparse matrix (csr_mat) type is designed for memory efficiency and fast row-by-row access to its elements, which is desirable for matrix-vector multiplication. PySparse can store symmetric matrices efficiently and provides conversion routines for the different formats.

PySparse also includes modules that implement

The code example below illustrates the use of the new sparse matrix types. The function poisson2d_sym_blk builds an ll_mat object, representing a sparse 2D-Poisson matrix of order n2. Notice that only the elements on and below the diagonal are stored. With two-dimensional indices, matrix elements and submatrices can accessed conveniently. The syntax is the same as the one used for NumPy's dense arrays types.

More information and further code examples for PySparse can be found in [1, Section 9.4] and [3].

More information and code examples for PyFemax can be found in [1, Section 9.5 and 9.6] and [3].

Since all objects of a given type (e.g. preconditioners) conform to the same standards, they are in fact interchangeable. Many algorithmic variants can be easily tested by combining existing objects.

In all these approaches, additional overhead is introduced by the Python interface. Since our algorithms only support a relatively fine-grained parallelism, it is not clear whether one of these approaches could lead to a successfully parallelised of the application.

The table below shows the execution times (in seconds) for several Python and Matlab functions generating 2D-Poisson matrices of different sizes. The Python and Matlab source codes and further details regarding this experiment can be found in [1, Section 9.4.1] and in Section 3.1.

| Function | n=1002 | n=3002 | n=5002 | n=10002 |

| Python poisson2d | 0.44 | 4.11 | 11.34 | 45.50 |

| Python poisson2d_sym | 0.26 | 2.34 | 6.55 | 26.33 |

| Python poisson2d_sym_blk | 0.03 | 0.21 | 0.62 | 2.22 |

| Matlab poisson2d | 28.19 | 3464.90 | 38859.00 | almost infinity |

| Matlab poisson2d_blk | 6.85 | 309.20 | 1912.10 | almost infinity |

| Matlab poisson2d_kron | 0.21 | 2.05 | 6.23 | 29.96 |

In the next experiment a linear system with the 2D-Poisson matrix is solved by the preconditioned conjugate gradient method using the Python code, a Matlab implementation and a native C implementation. The problems of equal size were identical such that the iterations proceeded equally in all three implementations.

| Size n | Python time | Native C time | Matlab time |

| 1002 | 1.12 | 0.96 | 8.85 |

| 3002 | 49.65 | 48.38 | 387.26 |

| 5002 | 299.39 | 288.67 | 1905.67 |

Regarding the calculation of electromagnetic fields, we have compared the performance of the old natively compiled code with the performance of the new mixed-language programming approach in [1, Section 9.6.1]. In our experiments, the natively compiled code was at most 20% faster. The overhead in the new implementation can be mainly attributed to Python function argument handling, slow Python file I/O and some interpreted mesh handling code that should be moved to a C extension module.

Python imposes no restriction on the size of the problems we can solve. In our biggest experiment a total of 18 GBytes of memory was used. The resulting sparse matrices had an order of 7.5 million and had up to 160 million non-zero elements, requiring up to 2.4 GBytes of memory. The Python code was running for about 40 hours to successfully compute the requested results.

We successfully applied the mixed-language programming approach based on Python and C to a large-scale scientific application computing electromagnetic fields in accelerator cavities. The new implementation based on PySparse and PyFemax is shorter, better maintainable, extensible and offers improved flexibility through scripting. The performance penalty introduced by Python is relatively small.

PySparse adds support for sparse matrices to Python and has been designed to be easy to use, applicable to a wide range of computational problems and also efficient in terms of memory and speed.

PySparse is already used in several other scientific projects: e.g. Oliver Bröker's WolfAMG [12], an object-oriented framework for building algebraic multigrid solvers, is based on PySparse.

PySparse and PyFemax are open source software packages and can be downloaded from http://www.inf.ethz.ch/personal/geus/pyfemax.